Dive Brief:

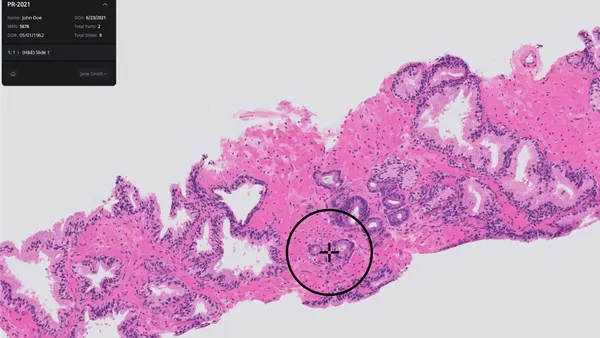

- Academics are urging the Food and Drug Administration to create a regulatory process to prevent AI-driven software as a medical device (SaMD) from exacerbating health disparities.

- Writing in the Journal of Science Policy & Governance, the researchers warn that “AI-driven tools have the potential to codify bias in healthcare settings” but note the FDA lacks regulations to examine bias in AI healthcare software.

- The researchers want the FDA to create a distinct regulatory process for AI devices and to appoint a panel of experts in “algorithmic justice and healthcare equity” to develop bias benchmarks and requirements.

Dive Insight:

Because AI is trained on existing data, it can reflect and potentially amplify the problems in those data sets, including a lack of information about how many diseases affect groups that are traditionally underserved by advanced medicine. Other researchers have already documented such problems in algorithms that under-diagnose lung disease in minority populations and allocate more resources to white patients.

The U.S. lacks dedicated healthcare AI regulations that address those risks. Researchers at the University of Pennsylvania and Oregon Health & Science University want that to change and have set out several paths forward in their paper.

The team’s preferred option is for the FDA to create a new regulatory process and a panel that will “review all AI-driven SaMD functions to ensure that they demonstrate a low chance of exacerbating existing health disparities.” Proposed requirements include the evaluation of training and testing datasets, reporting on existing disparities, and metrics to evaluate device accuracy across groups.
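As a rough illustration of what "metrics to evaluate device accuracy across groups" could look like in practice, the sketch below computes accuracy and sensitivity separately for each demographic group and reports the largest sensitivity gap. This is not drawn from the paper: the function names, group labels and sample records are all hypothetical, and a real submission would likely need larger samples, confidence intervals and clinically chosen metrics.

```python
from collections import defaultdict


def per_group_metrics(records):
    """Compute accuracy and sensitivity for each group.

    records: iterable of (group, y_true, y_pred) with binary labels 0/1.
    All names here are illustrative assumptions, not an FDA or paper-defined API.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, y_true, y_pred in records:
        if y_true == 1:
            key = "tp" if y_pred == 1 else "fn"
        else:
            key = "tn" if y_pred == 0 else "fp"
        counts[group][key] += 1

    metrics = {}
    for group, c in counts.items():
        total = sum(c.values())
        positives = c["tp"] + c["fn"]
        metrics[group] = {
            "accuracy": (c["tp"] + c["tn"]) / total,
            # Sensitivity is undefined when a group has no true positives.
            "sensitivity": c["tp"] / positives if positives else None,
        }
    return metrics


def sensitivity_gap(metrics):
    """Largest difference in sensitivity between any two groups."""
    values = [m["sensitivity"] for m in metrics.values()
              if m["sensitivity"] is not None]
    return max(values) - min(values) if values else None


# Hypothetical toy data: (group, true diagnosis, device prediction).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 1, 1), ("group_b", 0, 0), ("group_b", 0, 0),
]

metrics = per_group_metrics(records)
gap = sensitivity_gap(metrics)
print(metrics)
print("sensitivity gap:", gap)
```

In this toy data both groups have the same overall accuracy, yet the device misses half of the true cases in one group and none in the other. That is the kind of under-diagnosis a single aggregate accuracy figure would hide, which is why per-group reporting is among the proposed requirements.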

Rather than being spread across the 510(k), de novo and premarket approval pathways, AI-enabled SaMD would be assessed under the dedicated process. The researchers argue the switch could reduce the time taken to assess AI devices by one month, but they acknowledge there are barriers to the approach.

“This policy option would require a significant amount of funding and effort from both FDA and SaMD developers,” the researchers wrote. “Specific methods of ensuring unbiased output may be difficult to achieve -- for example, diversifying data sets through more open sharing of data is inhibited by privacy and proprietary concerns. This may hinder approval of devices and dissuade small businesses with fewer resources to overcome regulatory burdens from developing AI-driven SaMDs.”