Dive Brief:

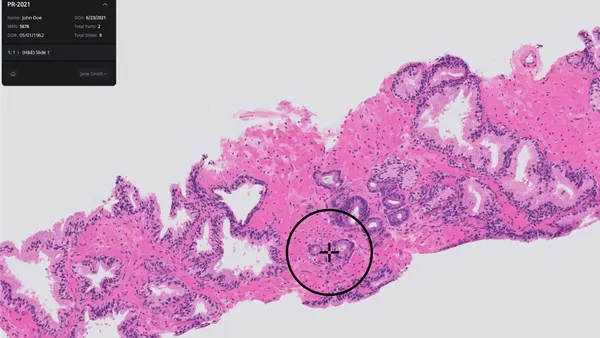

- FDA officials and the head of global software standards at Philips have warned that medical devices leveraging artificial intelligence and machine learning are at risk of exhibiting bias due to the lack of representative data on broader patient populations.

- The red flag came to the fore during Thursday's virtual Patient Engagement Advisory Committee meeting, with speakers emphasizing that social biases in the data used to train AI and machine learning algorithms could adversely impact care for a wide swath of patients and exacerbate health disparities.

- The meeting comes 18 months after FDA first proposed a regulatory framework for modifications to AI/ML-based software as a medical device using real-world learning and adaptation. However, the agency has yet to finalize its approach for addressing challenges to widespread use of the technology.

Dive Insight:

While AI and machine learning have the potential to transform healthcare, the technology has inherent biases that could negatively impact patient care, senior FDA officials and Philips' head of global software standards said at the meeting.

Bakul Patel, director of FDA's new Digital Health Center of Excellence, acknowledged significant challenges to AI/ML adoption including bias and the lack of large, high-quality and well-curated datasets.

"There are some constraints because of just location or the amount of information available and the cleanliness of the data might drive inherent bias. We don't want to set up a system and we would not want to figure out after the product is out in the market that it is missing a certain type of population or demographic or other aspects that we would have accidentally not realized," Patel said.

Pat Baird, Philips' head of global software standards, warned that, without proper context, there will be "improper use" of AI/ML-based devices that provide "incorrect conclusions" as part of clinical decision support.

"You can't understand healthcare by having just a single viewpoint," Baird said. "An algorithm trained on one subset of the population might not be relevant for a different subset."

Baird gave the example of a device algorithm trained on one subset of a patient population that might not be relevant to another, for instance at a pediatric hospital versus a geriatric hospital. Compounding the problem, patients sometimes must seek care at hospitals other than their local medical facilities. "Is that going to be a different demographic? Are they going to treat me differently in that hospital?" Baird asked, referring to the potential effects of such bias.

Terri Cornelison, CDRH's chief medical officer and director of the women's health program, also made the case for representation of diverse patient groups in data sets used to train AI/ML algorithms.

"In many instances, the AI/ML devices may be learning a worldview that is narrow in focus, particularly in the available training data, if the available training data do not represent a diverse set of patients. Or simply, AI and ML algorithms may not represent you if the data do not include you," Cornelison said, noting that most algorithm designs ignore how sex, gender, age, race and ethnicity affect health and disease in different people.

Failing to account for these distinctions in AI/ML training datasets, along with the lack of representative samples of the population in the data, results in bias that leads to "suboptimal results and produces mistakes," she said.

Time is of the essence for FDA to finalize an AI/ML regulatory framework that addresses the ongoing issues of social bias. CDRH Director Jeff Shuren noted that digital health technology has soared over the past decade, with diagnostics and therapeutics being used in medical care, including by patients at home.

"This software is increasingly being developed with artificial intelligence including machine learning capabilities," said Shuren, who pointed to last year's FDA AI/ML discussion paper, which he said outlined an approach that could ensure the safety and effectiveness of select changes to software as a medical device (SaMD) without the need for FDA review.

That paper made the case that fast-changing AI and machine learning technology requires a new total product lifecycle regulatory approach that facilitates a "rapid cycle of product improvement" and enables devices to "continually improve while providing effective safeguards." However, FDA has yet to finalize its proposed framework.

The problem with FDA's current regulatory framework is that it was not designed for adaptive algorithms. FDA has so far cleared or approved only medical devices that use "locked" algorithms, which provide the same result each time the same input is applied and do not change with use.

Nonetheless, Patel contends that FDA's proposed total product lifecycle approach, once finalized, will ultimately "provide the level of trust and confidence to the users, at the same time leveraging transparency and premarket assurance, as well as ongoing monitoring of those products that are learning on the fly."